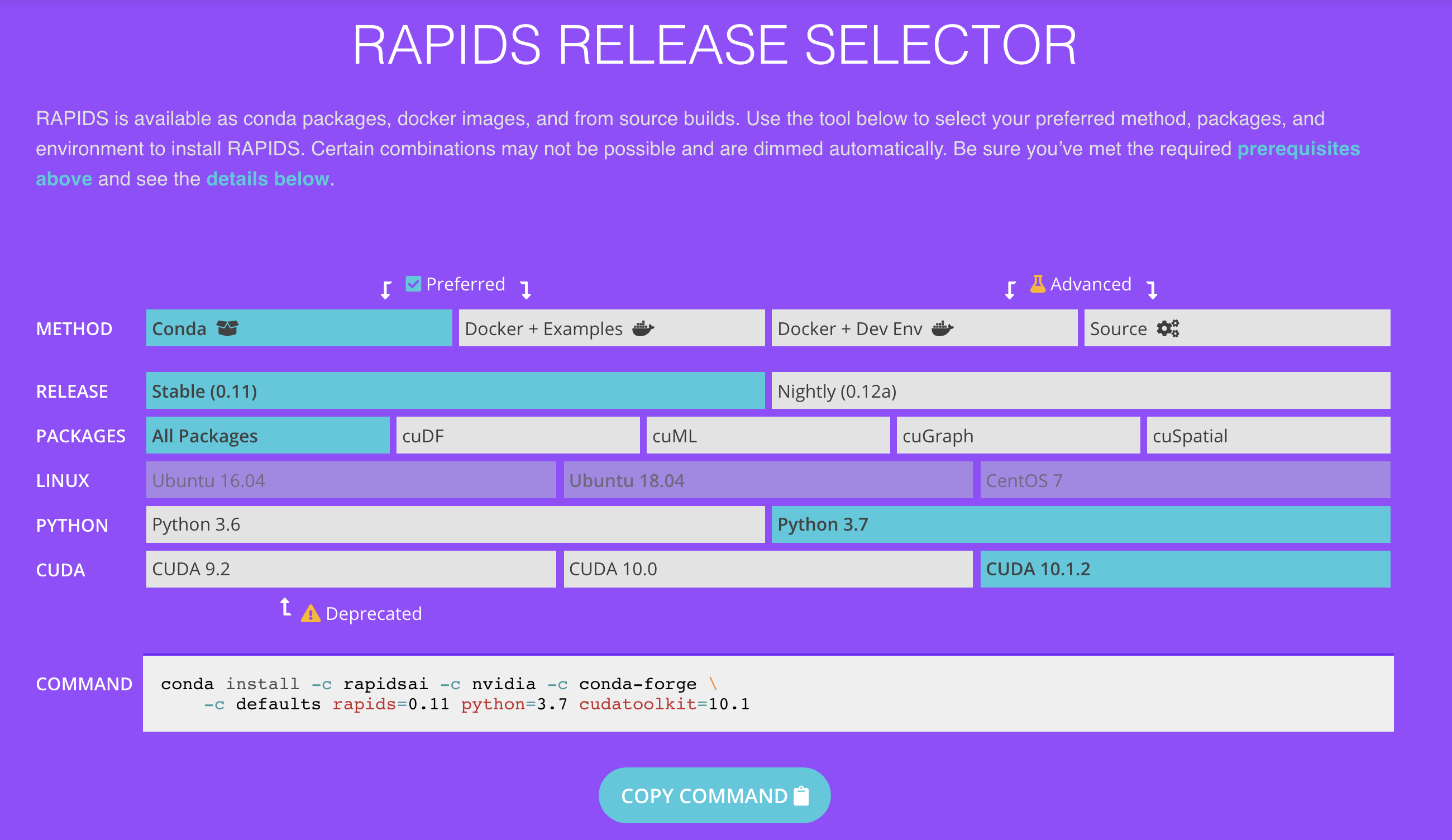

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

GitHub - kaustubhgupta/pandas-nvidia-rapids: This is a demonstration of running Pandas and machine learning operations on GPU using Nvidia Rapids

An Introduction to GPU DataFrames for Pandas Users - Data Science of the Day - NVIDIA Developer Forums